SEO Guide For Developers

It is not the developer's job to do SEO; their primary duty is to make sure websites are SEO-ready. In practice, that means developers control much of the groundwork that SEO depends on. Their influence over SEO can be summarized in three areas: Visibility, Viability, and Site Flexibility.

A developer is responsible for connecting the site to a database and for using the provided designs to build pages. A developer, however, isn't responsible for the design itself or for writing content.

Viability

Viability covers all the work done on the server and during early software configuration that prepares a site for ongoing SEO.

Generate And Store HTTP Server Logs

Most servers are set up correctly, but you should still make sure the log file includes the following:

- The referring domain and URL, date, time, response code, user agent, requested resource, file size, and request type.

- The client IP address.

- Relevant errors.
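One quick way to confirm that your access logs capture these fields is to parse a line and check what comes out. The sketch below assumes the "combined" log format, which Nginx uses by default and Apache commonly configures. If your server logs custom fields, you would need to adjust the regular expression. Errors usually go to a separate error log, which this sketch does not cover.

```python
import re
from datetime import datetime

# Assumes the "combined" log format:
# IP - user [time] "METHOD /resource PROTOCOL" status size "referrer" "user agent"
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<resource>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

def parse_log_line(line):
    """Return the fields of one access-log line as a dict, or None if it doesn't match."""
    match = COMBINED.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    # Date and time arrive together, e.g. 10/Oct/2000:13:55:36 -0700
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    entry["status"] = int(entry["status"])
    entry["size"] = 0 if entry["size"] == "-" else int(entry["size"])  # "-" means no body
    return entry

line = ('127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" '
        '200 2326 "http://www.example.com/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"')
entry = parse_log_line(line)
print(entry["ip"], entry["status"], entry["resource"], entry["referrer"])
```

If the parser returns None for your real log lines, or if a field such as the referrer is always empty, the server configuration is missing something the SEO team will need.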

Alongside these, you should also ensure that:

- The server doesn't delete old log files. At some point, someone will need to run a year-over-year analysis, and that analysis is impossible if the server deletes log files every 72 hours. Archive them instead. If the log files are huge, you can ask the SEO team to pay for an Amazon Glacier account.

- Logs are easy to retrieve. Make sure you and the rest of your development team can pull HTTP logs quickly and conveniently. It saves a lot of time.
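The sketch below shows one way to archive logs instead of deleting them. It assumes rotated logs live in a single directory. The directory names, the `*.log*` pattern, and the three-day threshold are hypothetical. Older files are gzip-compressed into an archive directory, and from there they could be shipped to cold storage such as Amazon Glacier.

```python
import gzip
import shutil
import time
from pathlib import Path

def archive_old_logs(log_dir, archive_dir, max_age_days=3, pattern="*.log*"):
    """Move log files older than max_age_days into archive_dir, gzip-compressed.

    Returns the list of archived paths. An original file is only deleted
    after its compressed copy has been written.
    """
    log_dir, archive_dir = Path(log_dir), Path(archive_dir)
    archive_dir.mkdir(parents=True, exist_ok=True)
    cutoff = time.time() - max_age_days * 86400
    archived = []
    for path in sorted(log_dir.glob(pattern)):
        if not path.is_file() or path.stat().st_mtime >= cutoff:
            continue  # skip directories and recent logs the server may still be writing
        if path.suffix == ".gz":
            # Already compressed (e.g. by a log rotator): just move it
            target = archive_dir / path.name
            shutil.move(str(path), str(target))
        else:
            target = archive_dir / (path.name + ".gz")
            with path.open("rb") as src, gzip.open(target, "wb") as dst:
                shutil.copyfileobj(src, dst)
            path.unlink()
        archived.append(target)
    return archived
```

You might run this daily from a scheduler, for example `archive_old_logs("/var/log/nginx", "/mnt/log-archive/nginx")` (hypothetical paths). Keeping the archive in one predictable place also makes the logs easy for the team to retrieve.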

Configure Analytics

- Make sure it tracks on-site search. Many visitors rely on that little magnifying glass on your site, so it is important to review the on-site search query data regularly.

- Make sure it can track visitors across domains and subdomains. If your company operates multiple domains or splits content across subdomains, cross-domain tracking lets your SEO team see how traffic naturally flows from one site to another.

- Filter traffic by IP address. One filter that comes in handy for SEO excludes users in your own office, so internal visits don't distort the data. Make sure you set it up.

- Track events that happen on a page.
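Analytics tools configure these options in their own settings, so the following is not any tool's API. It is a sketch of two ideas from the list above, applied to raw request data: dropping office traffic by IP and tallying on-site search queries. The office network (203.0.113.0/24, a documentation range), the `/search` path, and the `q` parameter are all hypothetical.

```python
import ipaddress
from collections import Counter
from urllib.parse import parse_qs, urlsplit

# Hypothetical office network; replace with your own ranges.
OFFICE_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]

def is_internal(ip):
    """True if the visitor's IP falls inside one of the office networks."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in OFFICE_NETWORKS)

def top_site_searches(requests, search_path="/search", param="q", n=10):
    """Count on-site search terms from (ip, url) pairs, ignoring office traffic."""
    counts = Counter()
    for ip, url in requests:
        if is_internal(ip):
            continue
        parts = urlsplit(url)
        if parts.path != search_path:
            continue
        for term in parse_qs(parts.query).get(param, []):
            counts[term.strip().lower()] += 1  # normalize case and surrounding spaces
    return counts.most_common(n)

sample = [
    ("198.51.100.7", "/search?q=Blue+Shoes"),
    ("198.51.100.8", "/search?q=blue%20shoes"),
    ("203.0.113.5", "/search?q=test"),  # office visit, filtered out
    ("198.51.100.9", "/products/1"),    # not a search
]
print(top_site_searches(sample))
```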

Consider Robots.txt

The robots meta tag tells bots not to index a page or, in other situations, not to follow links from that page. Robots.txt, in turn, tells crawlers which paths they may not crawl. Sites under development often block crawlers with both, so as soon as you launch the site, remember to remove the robots.txt disallow rules and the noindex meta tags.
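A small pre-launch check can catch leftover blocks. The sketch below uses Python's standard `urllib.robotparser` and `html.parser` modules. It takes the robots.txt text and the homepage HTML as strings, so it assumes you have already fetched them. The default URL is a placeholder.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""
    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        if (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]

def launch_blockers(robots_txt, homepage_html, url="https://www.example.com/"):
    """Return a list of leftover pre-launch crawl blocks (empty if none found)."""
    problems = []
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    if not parser.can_fetch("*", url):
        problems.append("robots.txt disallows crawling of " + url)
    finder = RobotsMetaFinder()
    finder.feed(homepage_html)
    for directive in ("noindex", "nofollow"):
        if directive in finder.directives:
            problems.append("robots meta tag contains " + directive)
    return problems

staging = launch_blockers(
    "User-agent: *\nDisallow: /\n",
    '<html><head><meta name="robots" content="noindex, nofollow"></head></html>',
)
print(staging)
```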

Set The Correct Response Codes

The most important response codes to get right are:

301: the resource you requested has moved permanently. Look at this one instead.

302: the resource you requested is temporarily at another URL. Look at that one for now.

4xx: there is a problem with the request. Most often, the resource you requested can't be found (404) or is gone for good (410).

5xx: the server failed to handle the request. Check back later.
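To make these codes concrete, here is a minimal sketch written as a WSGI app (Python's standard web-server interface). The page table and redirect map are hypothetical. The point is that a moved page answers 301 with a `Location` header, and a missing page answers 404 even when it shows a friendly message.

```python
# Hypothetical pages and permanent redirects, for illustration only.
PAGES = {"/": b"<h1>Home</h1>", "/shoes": b"<h1>Shoes</h1>"}
MOVED = {"/old-shoes": "/shoes"}

def app(environ, start_response):
    path = environ.get("PATH_INFO") or "/"
    if path in PAGES:
        status, body = "200 OK", PAGES[path]
        headers = [("Content-Type", "text/html; charset=utf-8")]
    elif path in MOVED:
        # Permanent move: point clients and crawlers at the new URL
        status, body = "301 Moved Permanently", b""
        headers = [("Location", MOVED[path])]
    else:
        # A friendly "not found" page is fine, as long as the status is 404, not 200
        status, body = "404 Not Found", b"<h1>Page not found</h1>"
        headers = [("Content-Type", "text/html; charset=utf-8")]
    headers.append(("Content-Length", str(len(body))))
    start_response(status, headers)
    return [body]
```

For local testing, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` serves the app on port 8000.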

Note that some servers return a 200 or a 30x for missing resources. Some shopping carts, for example, are programmed to return a 200 for broken links and missing resources.

On such a site, when you try to load a missing page, the server returns a 200, which means ‘OK’, and keeps you on that page instead of returning a 404.

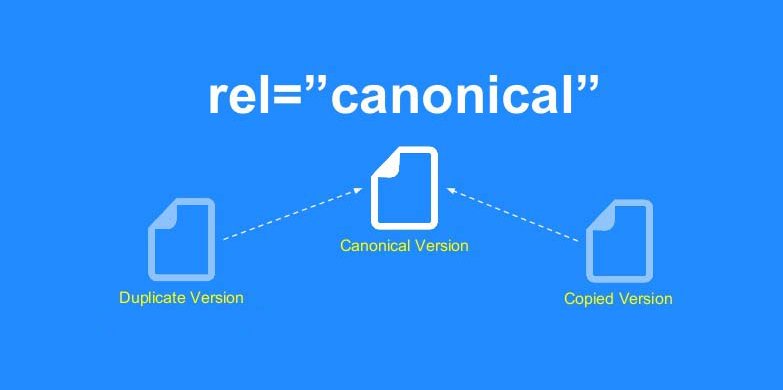

If every missing page returns a ‘Page not found’ message with a 200 status, crawlers index every instance of that message, creating massive duplication. This later shows up as a canonicalization issue, but it begins as a response code problem.
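A simple way to detect this "soft 404" behavior is to request a URL that cannot exist and look at the raw status code. The sketch below uses only the standard library. Redirects are deliberately not followed, so a 30x for a missing page is reported as-is rather than as the 200 of the page it redirects to. The random-path probe is an assumption about how your site names its pages.

```python
import urllib.error
import urllib.request
import uuid

class NoRedirect(urllib.request.HTTPRedirectHandler):
    """Report 30x responses as-is instead of following them."""
    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None

def status_for_missing_page(base_url, timeout=10):
    """Request a random URL that should not exist and return its HTTP status code."""
    probe = f"{base_url.rstrip('/')}/{uuid.uuid4().hex}.html"
    opener = urllib.request.build_opener(NoRedirect)
    try:
        with opener.open(probe, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 3xx (because redirects aren't followed), 4xx and 5xx land here

def is_soft_404(status):
    """A missing page should answer 404 or 410; a 200 or a redirect hides the problem."""
    return status == 200 or 300 <= status < 400

# Usage (hypothetical site):
# status = status_for_missing_page("https://www.example.com")
# if is_soft_404(status):
#     print("Missing pages return", status, "instead of 404")
```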

Configuring Headers

Before launching, plan which HTTP headers the site should send in each situation, and configure them. The plan should include:

- Last-Modified

- rel="canonical" (which can be sent in a Link header)

- hreflang (which can also be sent in a Link header)

- X-Robots-Tag
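As an illustration, the helper below builds these headers for a single response. The function name, its parameters, and the example URLs are hypothetical. The header formats follow common usage: an HTTP-date for `Last-Modified`, `Link` entries with `rel="canonical"` and `rel="alternate"; hreflang="…"`, and a comma-separated directive list for `X-Robots-Tag`.

```python
from datetime import datetime, timezone
from email.utils import format_datetime

def seo_headers(canonical_url, last_modified, alternates=None, robots=None):
    """Build SEO-related response headers as (name, value) pairs.

    last_modified must be a timezone-aware datetime; alternates maps
    language codes to URLs; robots is an optional X-Robots-Tag value.
    """
    # HTTP dates are always expressed in GMT, e.g. "Sun, 01 Jan 2023 12:00:00 GMT"
    when = last_modified.astimezone(timezone.utc)
    headers = [("Last-Modified", format_datetime(when, usegmt=True))]
    links = [f'<{canonical_url}>; rel="canonical"']
    for lang, url in (alternates or {}).items():
        links.append(f'<{url}>; rel="alternate"; hreflang="{lang}"')
    headers.append(("Link", ", ".join(links)))
    if robots:
        headers.append(("X-Robots-Tag", robots))
    return headers

headers = dict(seo_headers(
    "https://www.example.com/shoes",
    datetime(2023, 1, 1, 12, 0, tzinfo=timezone.utc),
    alternates={"en": "https://www.example.com/shoes",
                "de": "https://www.example.com/de/schuhe"},
    robots="noarchive",
))
print(headers["Last-Modified"])
```

Sending canonical and hreflang hints as headers is mainly useful for non-HTML files such as PDFs, where there is no `<head>` to put tags in.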

Other Random Things You Should Consider

Viability

Viability matters because it removes technical blockages so that your SEO work can proceed seamlessly.

Visibility

How your site ranks on a search engine depends on how it is built, because the build determines whether the search engine can find, crawl, and index its content.

Canonicalization

Conclusion

Don't use query attributes to tag and track navigation: every tagged variant of a page is a separate URL that crawlers may index as duplicate content. If you have, for instance, three different links to /foo.html and you want to track them, put the identifier in an attribute that your analytics reads, and use it to find out which link gets the most clicks.
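If tagged URLs already exist, one common mitigation is to point every variant at a single canonical URL. A sketch of that normalization is shown below. The set of tracking parameter names is hypothetical, and you would need to adjust it for your site. Parameters that actually change page content, such as a color filter, are kept.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical navigation/tracking parameters; adjust to your site.
TRACKING_PARAMS = {"from", "nav", "utm_source", "utm_medium", "utm_campaign"}

def canonical_url(url):
    """Strip tracking parameters (and the fragment) so tagged variants share one URL."""
    parts = urlsplit(url)
    kept = [(key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in TRACKING_PARAMS]
    # Hostnames are case-insensitive, so lowercase them for a stable canonical form
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))

variants = [
    "https://www.example.com/foo.html?from=header",
    "https://www.example.com/foo.html?from=sidebar",
    "https://WWW.example.com/foo.html?from=footer",
]
print({canonical_url(u) for u in variants})
```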

You can also use a tool like Hotjar, which records where people click and generates scroll and click heatmaps of your pages.